
Medical Imaging Interaction Toolkit

2024.12.99-d991a09f


Segmentation is the act of separating an image into foreground and background subsets by manual or automated delineation, where the foreground is defined to be part of the segmentation. Such a segmented image subset is also called a label as it typically labels a specific region of interest. A multilabel segmentation may contain multiple labels organized in distinct groups, so you can create labels for different regions of interest within a single segmentation image. Labels in the same group cannot overlap each other, but labels from different groups may overlap.

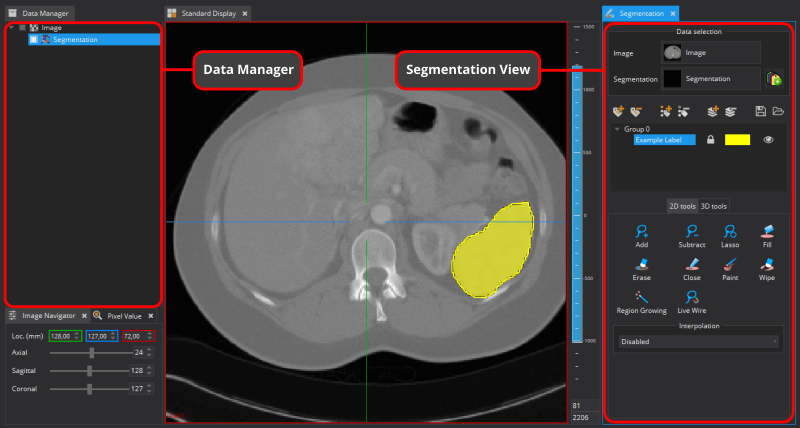

The MITK Segmentation Plugin allows you to create multilabel segmentations of anatomical and pathological structures in medical images. The plugin consists of three views: the Segmentation View, the Segmentation Utilities, and the Segmentation Task List.

In this user guide, the features of the Segmentation View are described. For an introduction to the Segmentation Utilities or Segmentation Task List, refer to the respective user guides.

The Segmentation View has a few prerequisites regarding segmentations and their reference image:

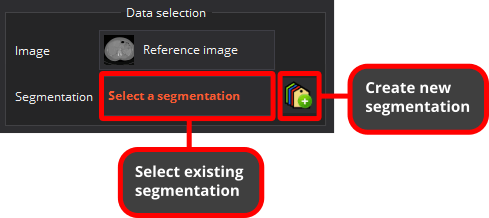

To select a reference image for a new segmentation, click on the Image widget in the Data selection section at the very top of the Segmentation View. Choose an image from the displayed list of Data Manager images. Once an image is selected, a new segmentation for this reference image can be created by clicking the button right next to the Segmentation widget in the Data selection section. A new multilabel segmentation with an initial, empty label is automatically generated if not set otherwise in the preferences. The new segmentation will be added to the Data Manager as a child node of its reference image node. It is automatically selected and can be edited in the Segmentation View right away.

Instead of creating a new segmentation, an existing segmentation can be selected and edited as well. The selection list of existing segmentations for a certain reference image consists of matching/congruent segmentations only.

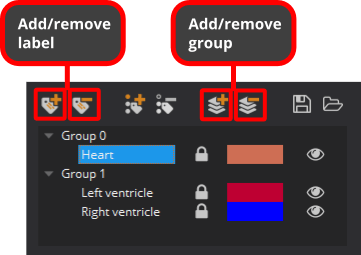

Segmentation images consist of at least a single group called "Group 0" in which the first default label is created. More groups can be added and removed but there will always be at least a single group. Labels of the same group cannot overlap each other. Labels of different groups may overlap each other.

For example, you could segment the whole heart as "Heart" label in "Group 0", add "Group 1" and create multiple labels of the anatomical details of the heart in that group. Naturally, all these labels lie within the extents of the "Heart" label of "Group 0" but in principle they are completely independent of "Group 0". Some pixels are now labelled twice, e.g., as "Heart" and "Left ventricle". Since the labels of "Group 1" cannot overlap each other, it is impossible to accidentally label a pixel as both "Left ventricle" and "Right ventricle".

If you would like to segment even more details you could create "Group 2" to have up to three labels per pixel. Nevertheless, groups are technically a flat data structure and cannot contain nested groups. It is all about possibly overlapping labels from distinct groups and spatially exclusive, non-overlapping labels within the same group.
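One way to picture the relationship between groups and labels is one image of pixel values per group. The following Python sketch only illustrates that idea and is not MITK's actual data structure; the names `groups`, `NAMES`, and `labels_at` as well as the pixel values are made up for this example.

```python
# Illustrative model only: each group is its own 2D image of pixel values.
# 0 means "unlabeled"; any other value identifies one label of that group.
# Hypothetical pixel values: 1 = Heart, 2 = Left ventricle, 3 = Right ventricle.
NAMES = {1: "Heart", 2: "Left ventricle", 3: "Right ventricle"}

groups = [
    # Group 0: the whole heart
    [[0, 1, 1, 1],
     [0, 1, 1, 1]],
    # Group 1: anatomical details of the heart
    [[0, 2, 0, 3],
     [0, 2, 0, 3]],
]


def labels_at(groups, row, col):
    """Return the label names found at one pixel, at most one per group."""
    values = (group[row][col] for group in groups)
    return [NAMES[v] for v in values if v != 0]


print(labels_at(groups, 0, 1))  # ['Heart', 'Left ventricle']
print(labels_at(groups, 0, 3))  # ['Heart', 'Right ventricle']
print(labels_at(groups, 0, 0))  # []
```

Because each group holds exactly one value per pixel in this model, two labels of the same group can never share a pixel, while labels of different groups can.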

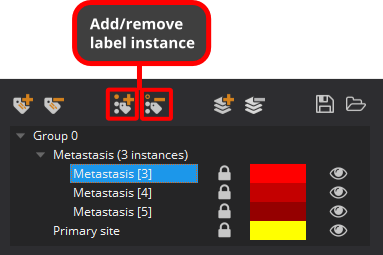

The Segmentation View supports label instances, that is, segmenting multiple distributed entities of the same kind, such as metastases.

A label, as we used the term before, is already a single instance of itself but it may consist of multiple label instances.

If a label consists of multiple label instances, they each show their own distinct pixel value in square brackets as a clue for distinction and identification.

It is important to understand that this number is not a separate, consecutive index for each label. It is just the plain pixel value of the label instance, which is unique across all label instances of the whole segmentation.

Label instances are locked by default: label instances from the same group cannot accidentally override pixels from other label instances. Locked label instances behave like cookie cutters for other label instances of the same group. You can unlock label instances to remove that protection from other label instances of the same group. Their pixel contents can then be overridden by other label instances of the same group.

Remember that label instances from distinct groups do not interact with each other. They can always overlap (not override) each other.
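The cookie-cutter behavior of locked label instances can be sketched in a few lines of Python. This is a simplified illustration under the assumption that a group is a 2D grid of pixel values; the function `paint` and its parameters are hypothetical and do not correspond to an MITK API.

```python
def paint(group, locked, pixels, value):
    """Write `value` into `pixels` of one group, skipping locked label instances.

    group  -- 2D list of pixel values (0 = unlabeled), modified in place
    locked -- set of pixel values whose label instances are locked
    pixels -- iterable of (row, col) positions covered by the drawing action
    """
    for row, col in pixels:
        current = group[row][col]
        if current != value and current in locked:
            continue  # a locked instance acts like a cookie cutter
        group[row][col] = value
    return group


group = [[1, 1, 0, 0]]
stroke = [(0, 1), (0, 2), (0, 3)]

# Label instance 1 is locked: the stroke only fills the unlabeled pixels.
paint(group, locked={1}, pixels=stroke, value=2)
print(group)  # [[1, 1, 2, 2]]

# After unlocking, label instance 1 can be overridden.
paint(group, locked=set(), pixels=[(0, 1)], value=2)
print(group)  # [[1, 2, 2, 2]]
```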

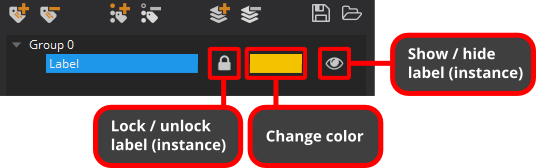

You can also change the color of label instances as well as show (default) or hide their pixel contents. The icons at the right side of the rows of the groups and labels widget reflect their state in all these regards.

Renaming of labels and label instances can be found in their context menu as shown further below.

Especially if a segmentation has many label instances or the label names are not descriptive, it can be difficult to identify a label instance in the label inspector of the Segmentation View. To mitigate this problem, MITK uses label highlighting in the render windows. As long as you hover with the mouse cursor over a group, label, or label instance, the respective label instances are highlighted in the render windows. Highlighted labels visually pop out by being shown with full opacity (no transparency), while the opacity of all non-highlighted labels of the same segmentation is reduced to 30% of the current opacity value (they become very transparent).

By default, label instances that are set to be invisible are not shown while highlighted. Pressing the Shift key while highlighting forces invisible label instances to be shown as well.
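The highlighting rules can be written down as a small worked example. The function names and parameters below are hypothetical and only restate the behavior described above; they are not MITK functions.

```python
def displayed_opacity(current_opacity, is_highlighted, any_highlight_active):
    """Opacity used for a label while the mouse hovers over the label inspector."""
    if not any_highlight_active:
        return current_opacity
    if is_highlighted:
        return 1.0  # full opacity, no transparency
    return 0.3 * current_opacity  # reduced to 30% of the current value


def is_shown_while_highlighted(visible, shift_pressed):
    """Invisible label instances only show up if the Shift key is pressed."""
    return visible or shift_pressed


print(displayed_opacity(0.5, True, True))    # 1.0
print(displayed_opacity(0.5, False, True))   # 0.15
print(displayed_opacity(0.5, False, False))  # 0.5
print(is_shown_while_highlighted(False, True))  # True
```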

Remark: the highlighting is supported in all views that use the label inspector (e.g. also the segmentation utilities).

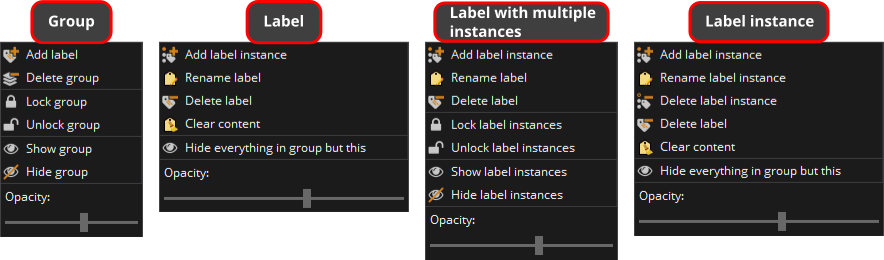

Actions for organizing groups, labels, and label instances (as well as other operations) can also be found in their right-click context menus.

Most actions available in these context menus are self-explanatory or were already described above by other means of access like the tool button bar for adding and removing groups, labels, and label instances.

Labels and label instances can be renamed, while groups have fixed names. Note that renaming a label instance will make a separate label out of it, since all instances of the same label share a single common name.

Clear content only clears the pixels of a label instance but won't delete the actual label instance.

Groups can be locked and unlocked as a whole from their context menu, while label instances can be directly locked and unlocked outside the context menu as described further below.

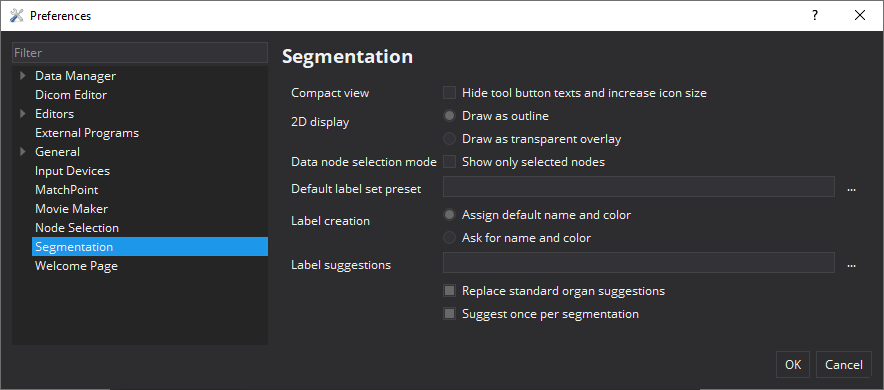

When renaming label instances or creating new label instances with enforced manual naming in the Segmentation preferences, entering names is supported by auto-completion for common label names. The list of predefined label names and colors for the auto-completion feature can be either extended or replaced by a custom list of label name and color suggestions. This custom list must be specified as a JSON file, just containing an array of objects, each with a mandatory "name" string and an optional "color" string. The JSON file can be set in the Segmentation preferences as well as a few options on how to apply these suggestions.
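As a minimal sketch, such a suggestion file could be generated and checked with Python as follows. The structure (an array of objects with a mandatory "name" and an optional "color") follows the description above; the example names and the hexadecimal color notation are assumptions, since the text only states that the color is a string. The helper `validate_suggestions` is hypothetical.

```python
import json


def validate_suggestions(suggestions):
    """Check the structure described above: an array of objects, each with a
    mandatory "name" string and an optional "color" string."""
    if not isinstance(suggestions, list):
        raise ValueError("top-level JSON value must be an array")
    for entry in suggestions:
        if not isinstance(entry, dict):
            raise ValueError("each array element must be an object")
        if not isinstance(entry.get("name"), str):
            raise ValueError('each object needs a "name" string')
        if "color" in entry and not isinstance(entry["color"], str):
            raise ValueError('"color" must be a string when present')
    return suggestions


# Hypothetical example content; the hex color notation is an assumption.
suggestions = [
    {"name": "Liver", "color": "#ff0000"},
    {"name": "Spleen"},
]

text = json.dumps(validate_suggestions(suggestions), indent=2)
print(text)  # save this text as a .json file and select it in the preferences
```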

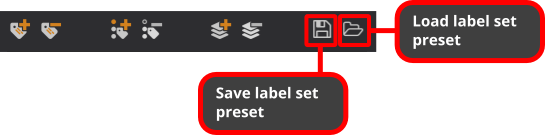

Label set presets are useful to share a certain style or scheme between different segmentation sessions or to provide templates for new segmentation sessions.

The properties of all label instances in all groups like their names, colors, and visibilities are saved as a label set preset by clicking on the 'Save label set preset' button. Label set presets are applied to any segmentation session by clicking on the 'Load label set preset' button. If a label instance for a certain value already exists, its properties are overridden by the preset. If a label instance for a certain value does not yet exist, an empty label instance with the label properties of the preset is created. The actual pixel contents of label instances are unaffected as label set presets only store label properties.
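The rules for applying a preset can be sketched as follows. This is an illustration under the assumption that label instances are stored in a dictionary keyed by pixel value; the function `apply_preset`, the dictionary layout, and the example names and colors are hypothetical and do not reflect the preset file format.

```python
def apply_preset(label_instances, preset):
    """Apply label properties from a preset, keyed by pixel value.

    label_instances -- dict: pixel value -> {"properties": {...}, "pixels": set}
    preset          -- dict: pixel value -> properties (name, color, visibility)
    """
    for value, properties in preset.items():
        if value in label_instances:
            # Existing instance: properties are overridden, pixels stay untouched.
            label_instances[value]["properties"] = dict(properties)
        else:
            # Missing instance: create it empty with the preset's properties.
            label_instances[value] = {"properties": dict(properties), "pixels": set()}
    return label_instances


segmentation = {
    1: {"properties": {"name": "Label 1", "color": "red", "visible": True},
        "pixels": {(0, 0), (0, 1)}},
}
preset = {
    1: {"name": "Liver", "color": "brown", "visible": True},
    2: {"name": "Spleen", "color": "purple", "visible": False},
}

apply_preset(segmentation, preset)
print(segmentation[1]["properties"]["name"])  # Liver
print(sorted(segmentation[1]["pixels"]))      # [(0, 0), (0, 1)]
print(segmentation[2]["pixels"])              # set()
```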

If you work on a repetitive segmentation task, manually loading the same label set preset for each and every new segmentation can be tedious. To streamline your workflow, you can set a default label set preset in the Segmentation preferences (Ctrl+P). When set, this label set preset will be applied to all new segmentations instead of creating the default red "Label 1" label instance.


The Segmentation Plugin offers a number of preferences which can be set via the MITK Workbench application preferences (Ctrl+P):

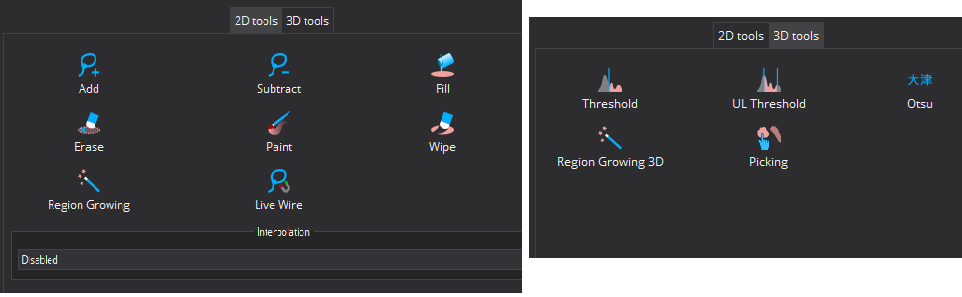

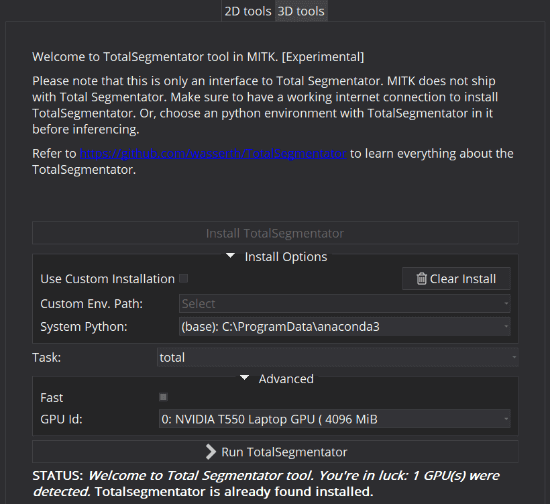

MITK offers a comprehensive set of slice-based 2D and (semi-)automated 3D segmentation tools. The manual 2D tools require some user interaction and can only be applied to a single image slice whereas the 3D tools operate on the whole image. The 3D tools usually only require a small amount of user interaction, i.e. placing seed points or setting / adjusting parameters. You can switch between the different toolsets by selecting the 2D or 3D tab in the segmentation view.

With 2D manual contouring you define which voxels are part of the segmentation and which are not. This allows you to create segmentations of any structures of interest in an image. You can also use manual contouring to correct segmentations that result from sub-optimal automatic methods. The drawback of manual contouring is that you might need to define contours on many 2D slices. However, this is mitigated by the interpolation feature, which will make suggestions for a segmentation.

To start using one of the editing tools, click its button from the displayed toolbox. The selected editing tool will be active and its corresponding button will stay pressed until you click the button again. Selecting a different tool also deactivates the previous one.

If you have to delineate a lot of images, shortcuts for switching between tools become convenient. Just hit the first letter of a tool to activate it (A for Add, S for Subtract, etc.).

All of the editing tools work by the same principle: using the mouse (left button) to click anywhere in a 2D window (any of the orientations axial, sagittal, or coronal), moving the mouse while holding the mouse button and releasing the button to finish the editing action. Multi-step undo and redo is fully supported by all editing tools by using the application-wide undo / redo buttons in the toolbar.

Remark: Clicking and moving the mouse in any of the 2D render windows will move the crosshair that defines what part of the image is displayed. This behavior is disabled as long as any of the manual segmentation tools are active - otherwise you might have a hard time concentrating on the contour you are drawing.

Use the left mouse button to draw a closed contour. When releasing the mouse button, the contour will be added (Add tool) to or removed (Subtract tool) from the current segmentation. Adding and subtracting voxels can be iteratively repeated for the same segmentation. Holding CTRL / CMD while drawing will invert the current tool's behavior (i.e. instead of adding voxels, they will be subtracted).
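The effect of the Add and Subtract tools, including the inversion by the CTRL / CMD modifier, can be expressed with set operations. The sketch below assumes that the pixels enclosed by the drawn contour are already known; `apply_contour` and its parameters are hypothetical names used for illustration only.

```python
def apply_contour(segmentation, enclosed, tool, ctrl_pressed=False):
    """Return the label's pixel set after a closed contour has been drawn.

    segmentation -- set of (row, col) pixels currently in the label
    enclosed     -- set of (row, col) pixels enclosed by the drawn contour
    tool         -- "add" or "subtract"
    ctrl_pressed -- CTRL / CMD held while drawing inverts the tool
    """
    if tool not in ("add", "subtract"):
        raise ValueError("tool must be 'add' or 'subtract'")
    adding = (tool == "add") != ctrl_pressed  # XOR: the modifier flips the mode
    return segmentation | enclosed if adding else segmentation - enclosed


label = {(0, 0), (0, 1)}
contour = {(0, 1), (0, 2)}

print(sorted(apply_contour(label, contour, "add")))                     # [(0, 0), (0, 1), (0, 2)]
print(sorted(apply_contour(label, contour, "subtract")))                # [(0, 0)]
print(sorted(apply_contour(label, contour, "add", ctrl_pressed=True)))  # [(0, 0)]
```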

The tool is a more advanced version of the add/subtract tool. It offers you the following features:

To start a segmentation, double left click where the first anchor point should be. To end the segmentation, double left click where the last anchor point should be. Please note that:

Use the Size slider to change the radius of the round paintbrush tool. Move the mouse in any 2D window and press the left button to draw or erase pixels. Holding CTRL / CMD while drawing will invert the current tool's behavior (i.e. instead of painting voxels, they will be wiped).

Click at one point in a 2D slice widget to add an image region to the segmentation with the region growing tool. Region Growing selects all pixels around the mouse cursor that have a gray value similar to that of the pixel below the mouse cursor. This allows you to quickly create segmentations of structures that have a good contrast to surrounding tissue. The tool operates based on the current level window, so changing the level window to optimize the contrast for the ROI is encouraged. Moving the mouse up / down has a different effect than moving it left / right: moving the cursor up while holding the left mouse button widens the range of included gray values; moving it down narrows it. Moving the mouse left and right shifts the range. The tool will select more or fewer pixels, corresponding to the changing gray value range.
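The principle of region growing from a seed pixel can be sketched as a breadth-first search over neighboring pixels. This is a simplified illustration, not the implementation used by MITK: the 4-connectivity, the closed gray value range, and the names `region_grow`, `center`, and `half_width` are assumptions.

```python
from collections import deque


def region_grow(image, seed, lower, upper):
    """Collect all pixels 4-connected to `seed` whose gray value lies in
    the closed range [lower, upper]."""
    rows, cols = len(image), len(image[0])
    region, queue = set(), deque([seed])
    while queue:
        row, col = queue.popleft()
        if (row, col) in region:
            continue
        if not (0 <= row < rows and 0 <= col < cols):
            continue
        if not (lower <= image[row][col] <= upper):
            continue
        region.add((row, col))
        queue.extend([(row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)])
    return region


image = [
    [10, 12, 50],
    [11, 13, 52],
    [90, 14, 11],
]
seed = (0, 0)
center = image[0][0]  # gray value below the cursor

# A narrow range around the seed value selects four pixels.
print(sorted(region_grow(image, seed, center - 3, center + 3)))
# [(0, 0), (0, 1), (1, 0), (1, 1)]

# Widening the range (mouse moved up) selects more pixels.
print(len(region_grow(image, seed, center - 4, center + 4)))  # 6
```

Shifting the range (mouse moved left or right) would correspond to adding the same offset to both `lower` and `upper`.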

Left-click inside a region/segmentation to flood fill all connected pixels that have the same label with the active label. This tool will only work on regions of unlocked labels or on regions that are not labeled at all.

This tool removes a connected part of pixels that form a segmentation. You may use it to remove single segmented regions by left-clicking on a specific segmentation region. This tool will only work on regions of unlocked labels or on regions of the active label.

Left-click inside the region/segmentation to fill all "holes" (pixels labelled with another label or no label) inside the region. This tool therefore behaves like a local closing operation. It will not work when a non-labeled region is selected, and holes of locked labels will not be filled.

The Live Wire Tool acts as a magnetic lasso with a contour snapping to edges of objects.

The tool is handled in the same way as the Lasso tool described above, except that it generates live wire contours instead of straight lines.

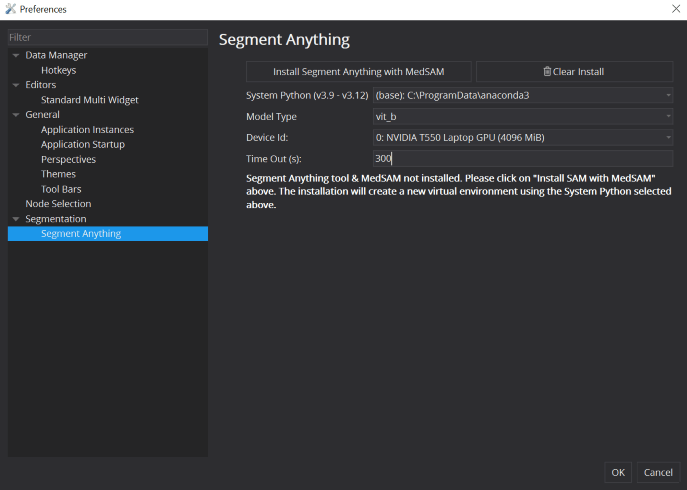

The Segment Anything Tool is a deep learning-based interactive segmentation tool. The underlying model was originally created by Meta AI; MITK makes it available for medical image segmentation tasks. The tool requires that you have Python 3 installed and available on your machine. Note: On Debian/Ubuntu systems, you need to install the git, python3-pip, and python3-venv packages using the following command: apt install git python3-pip python3-venv. For the best experience, your machine should ideally be equipped with a CUDA-enabled GPU. For a detailed explanation of what this algorithm is able to do, please refer to https://ai.facebook.com/research/publications/segment-anything/
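Before using the tool, it can help to check whether the prerequisites named above are present. The following Python sketch is one possible check and not part of MITK; it assumes that it is run with the Python interpreter you intend to use, and that a missing `ensurepip` module indicates a missing python3-venv package on Debian/Ubuntu systems.

```python
import importlib.util
import shutil
import sys


def check_prerequisites():
    """Report whether the prerequisites named above can be found."""
    return {
        "python3": sys.version_info.major == 3,
        "git": shutil.which("git") is not None,
        "pip": importlib.util.find_spec("pip") is not None,
        # Assumption: on Debian/Ubuntu, ensurepip ships with python3-venv.
        "venv": importlib.util.find_spec("ensurepip") is not None,
    }


for name, found in check_prerequisites().items():
    print(f"{name}: {'found' if found else 'missing'}")
```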

Any adjustments to the level window setting impact the segmentation. However, any applied color maps are ignored.

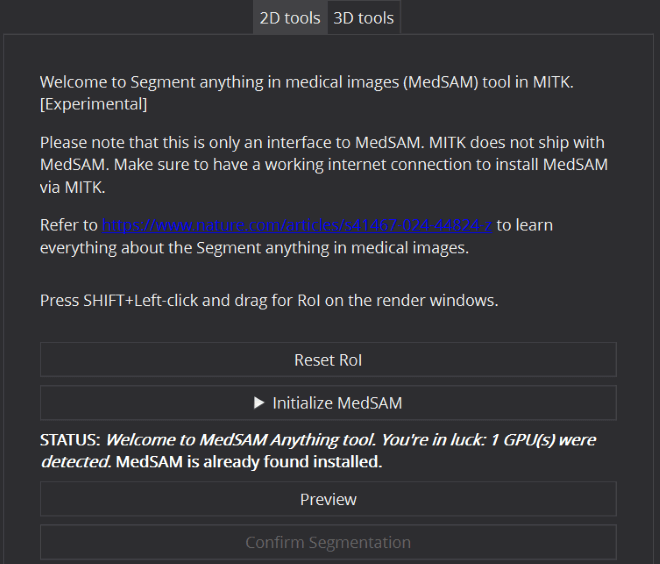

The MedSAM (Segment Anything in Medical Images) tool is a specialization of the Segment Anything (SAM) tool. A new foundation model in the back end is dedicated to universal medical image segmentation. Just like the Segment Anything tool, the MedSAM tool requires that you have Python 3 installed and available on your machine. Note: On Debian/Ubuntu systems, you need to install the git, python3-pip, and python3-venv packages using the following command: sudo apt install git python3-pip python3-venv. For the best experience, your machine should ideally be equipped with a CUDA-enabled GPU. Any adjustments to the level window setting impact the segmentation. However, any applied color maps are ignored.

Note: For a detailed explanation of what this algorithm is able to do, please refer to https://www.nature.com/articles/s41467-024-44824-z

Read more about the tool in the description of the MONAI Label 3D tool below.

Creating segmentations using 2D manual contouring for large image volumes may be very time-consuming, because structures of interest may cover a large range of slices. The segmentation view offers two helpful features to mitigate this drawback:

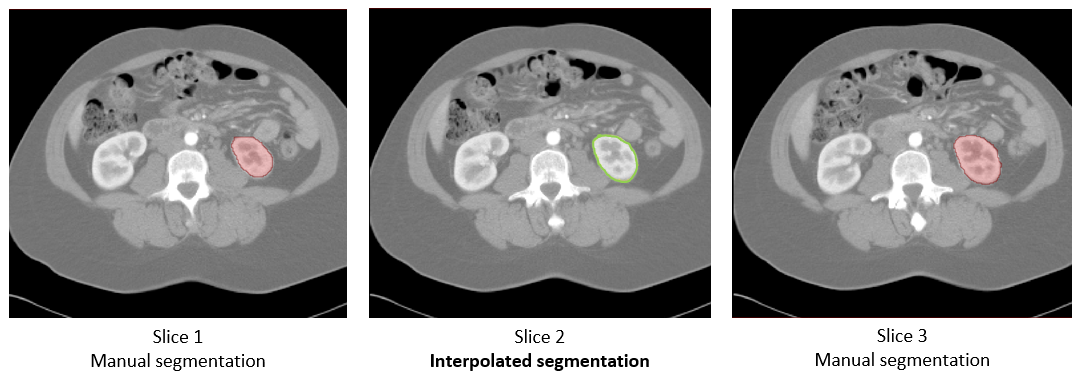

The 2D Interpolation creates suggestions for a segmentation whenever you have a slice that

Interpolated suggestions are displayed as outlines, until you confirm them as part of the segmentation. To confirm single slices, click the Confirm for single slice button below the toolbox. You may also review the interpolations visually and then accept all of them at once by selecting Confirm for all slices.

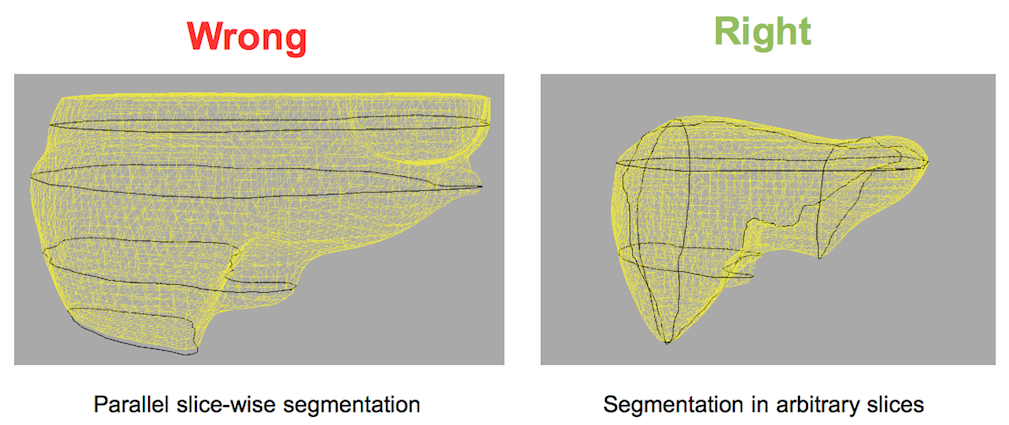

The 3D interpolation creates suggestions for 3D segmentations. That means if you start contouring, from the second contour onwards, the surface of the segmented area will be interpolated based on the given contour information. The interpolation works with all available manual tools. Please note that this is currently a purely mathematical interpolation, i.e. image intensity information is not taken into account. With each further contour the interpolation result will be improved, but the more contours you provide the longer the recalculation will take. To achieve an optimal interpolation result, and thus the most accurate segmentation, you should try to describe the surface with sparse contours by segmenting in arbitrarily oriented planes. The 3D interpolation is not meant to be used for parallel slice-wise segmentation, but rather for segmentations in, e.g., the axial, coronal, and sagittal planes.

You can accept the interpolation result by clicking the Confirm-button below the tool buttons. In this case the 3D interpolation will be deactivated automatically so that the result can be post-processed without any interpolation running in the background.

In addition to the surface, black contours are shown in the 3D render window, which mark all the drawn contours used for the interpolation. You can navigate between the drawn contours by clicking on the corresponding position nodes in the data manager, which are stored as sub-nodes of the selected segmentation. If you do not want to see these nodes, just uncheck the Show Position Nodes checkbox and these nodes will be hidden.

If you want to delete a drawn contour, we recommend using the Erase tool since undo / redo is not yet working for 3D interpolation. The current state of the 3D interpolation can be saved across application restarts. To do so, just click on save project while the interpolation is active. After restarting the application and loading your project, you can click on "Reinit Interpolation" within the 3D interpolation GUI area.

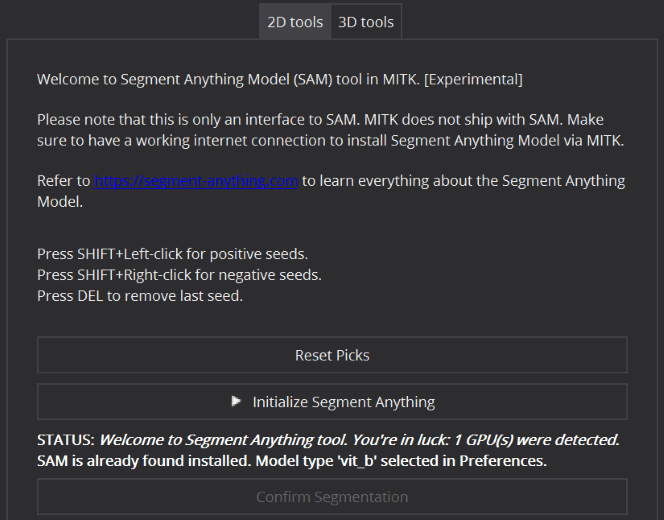

The 3D tools operate on the whole image and usually require only a small amount of interaction, like placing seed points or specifying certain parameters. All 3D tools provide immediate segmentation feedback, which is displayed as a transparent green overlay. To accept a preview, you have to press the Confirm button of the selected tool. The following 3D tools are available:

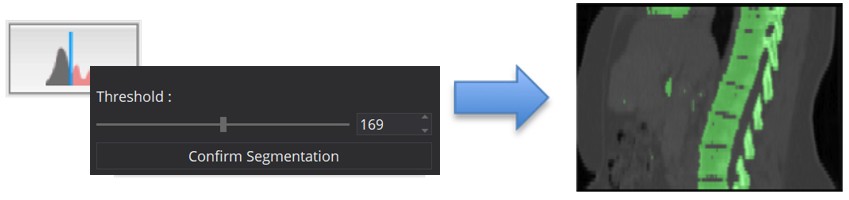

The thresholding tool simply applies a 3D threshold to the patient image. All pixels with values equal to or above the selected threshold are labeled as part of the segmentation. You can change the threshold by either moving the slider or setting a certain value in the spinbox.

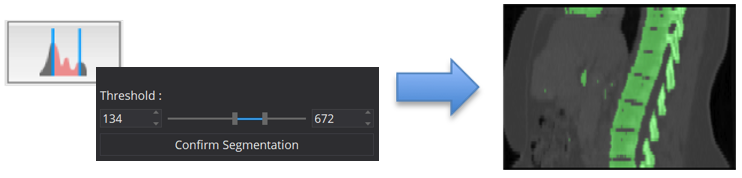

The Upper/Lower Thresholding tool works similarly to the simple 3D threshold tool but allows you to define an upper and a lower threshold. All pixels with values within this threshold interval will be labeled as part of the segmentation.
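Both thresholding tools can be summarized by one small function. The sketch below works on a 2D list for brevity, whereas the tools operate on the whole 3D image; treating the upper bound as inclusive is an assumption, and the function `threshold` is a hypothetical name.

```python
def threshold(image, lower, upper=None):
    """Return a binary mask: 1 where lower <= value (and value <= upper, if given)."""
    return [
        [1 if value >= lower and (upper is None or value <= upper) else 0
         for value in row]
        for row in image
    ]


image = [
    [10, 100, 200],
    [50, 150, 250],
]

# Simple threshold: everything equal to or above 100.
print(threshold(image, 100))       # [[0, 1, 1], [0, 1, 1]]

# Upper/lower threshold: everything within [100, 200].
print(threshold(image, 100, 200))  # [[0, 1, 1], [0, 1, 0]]
```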

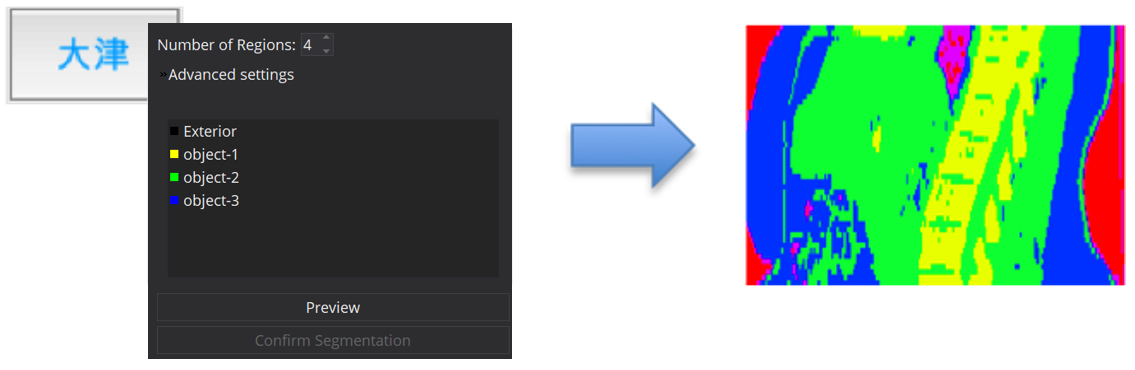

The 3D Otsu tool provides a more sophisticated thresholding algorithm. It allows you to define a number of regions. Based on the image histogram the pixels will then be divided into different regions. The more regions you define the longer the calculation will take. You can select afterwards which of these regions you want to confirm as segmentation.
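To illustrate the idea behind histogram-based thresholding, the following Python sketch computes Otsu's threshold for the simplest case of two regions. It is a textbook formulation for integer gray values, not the implementation used by the tool, which supports more than two regions; the function name `otsu_threshold` is hypothetical.

```python
from collections import Counter


def otsu_threshold(values):
    """Return the threshold t that maximizes the between-class variance when
    splitting integer `values` into {v <= t} and {v > t}."""
    histogram = Counter(values)
    total = len(values)
    total_sum = sum(values)
    best_t, best_variance = None, -1.0
    count_low, sum_low = 0, 0
    for t in sorted(histogram):
        count_low += histogram[t]
        sum_low += t * histogram[t]
        count_high = total - count_low
        if count_high == 0:
            break  # nothing left above t, no split possible
        mean_low = sum_low / count_low
        mean_high = (total_sum - sum_low) / count_high
        # Between-class variance up to the constant factor 1 / total**2.
        variance = count_low * count_high * (mean_low - mean_high) ** 2
        if variance > best_variance:
            best_t, best_variance = t, variance
    return best_t


values = [10, 11, 12, 13, 200, 201, 202, 203]
print(otsu_threshold(values))  # 13
```

With more regions, the same criterion is optimized over several thresholds at once, which is why the calculation takes longer as the number of regions grows.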

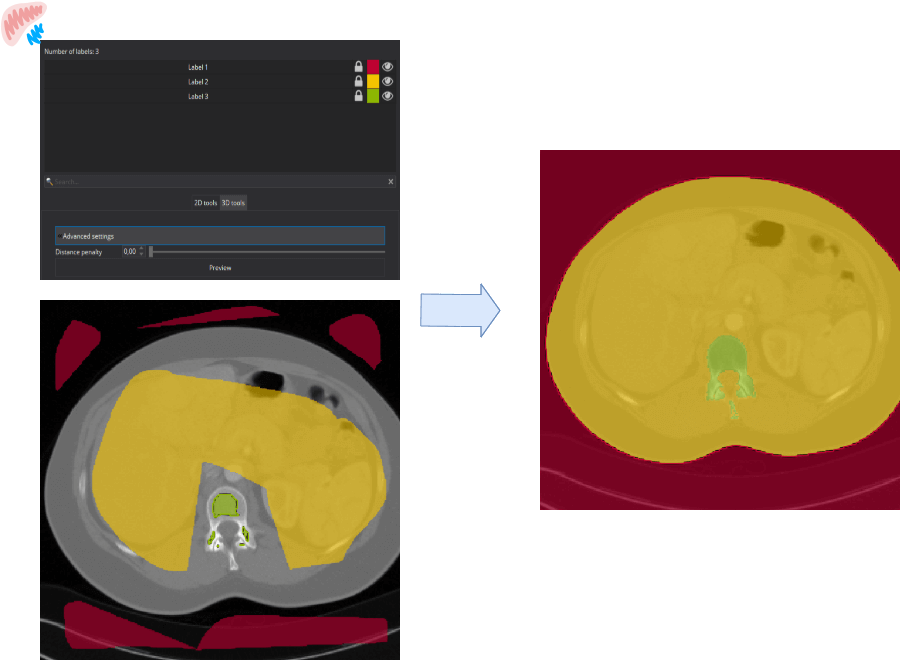

The 3D GrowCut tool uses previously created segmentation labels (e.g. by the "Add"-tool) stored in the segmentation layer 0. The GrowCut tool will use these segmentation labels to create a seed image that will serve as input to the algorithm. As an advanced setting option, a Distance penalty can be set, which increases the cohesion in the immediate surroundings of the initial labels. Based on the seed image and the Distance penalty, a growing process is started, which includes all areas that are not initially assigned to a specific label. During this process, initially unassigned areas are assigned to the best fitting labels. After the segmentation process, the user can decide which newly generated labels should be confirmed.

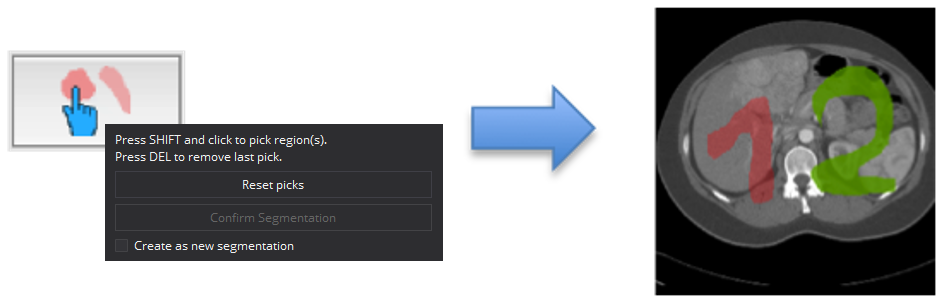

The Picking tool offers two modes that allow you to manipulate "islands" within your segmentation. This is especially useful if e.g. a thresholding provided you with several areas within your image but you are just interested in one special region.

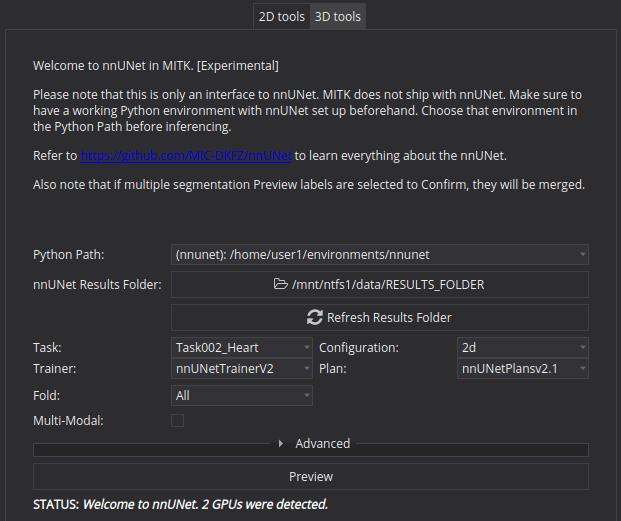

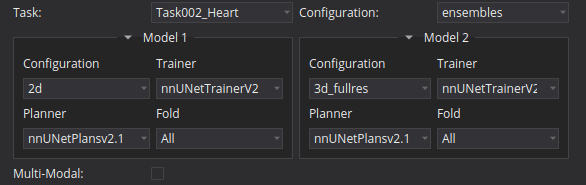

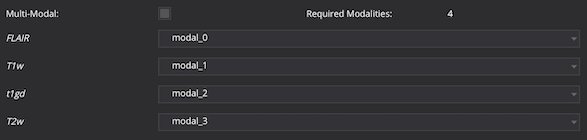

This tool provides a GUI to the deep learning-based segmentation algorithm called nnU-Net v1. With this tool, you can get a segmentation mask predicted for the image loaded in MITK. Have the pre-trained weights (a.k.a. RESULTS_FOLDER) for the organ or task of interest ready before using the tool. For a detailed explanation of the parameters, the folder structure of the pre-trained weights, etc., please refer to https://github.com/MIC-DKFZ/nnUNet.

Remark: The tool assumes that you have a Python 3 environment with nnU-Net v1 (pip) installed. Your machine should also be equipped with a CUDA-enabled GPU.

The "GPU Id" combobox is populated from the nvidia-smi utility output. In case your machine has Nvidia CUDA-enabled GPUs but nvidia-smi fails for some reason, the "GPU Id" combobox will show no entries. In such a situation, it is still possible to run inference by manually entering the preferred GPU Id, e.g. 0, in the combobox.
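If the combobox stays empty, you can check outside of MITK what the nvidia-smi utility reports. The following Python sketch is one way to do that and is not how MITK itself queries the GPUs; the function `list_gpu_ids` is a hypothetical helper. It returns an empty list when nvidia-smi is missing or fails.

```python
import shutil
import subprocess


def list_gpu_ids():
    """Return GPU indices reported by nvidia-smi, or [] if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=index", "--format=csv,noheader"],
            capture_output=True, text=True, check=True, timeout=10,
        )
    except (subprocess.SubprocessError, OSError):
        return []
    return [int(line) for line in result.stdout.splitlines() if line.strip().isdigit()]


print(list_gpu_ids())  # e.g. a list with one index per detected GPU
```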

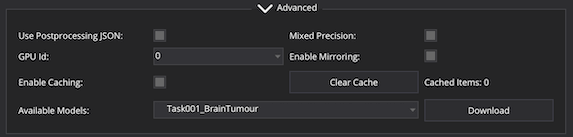

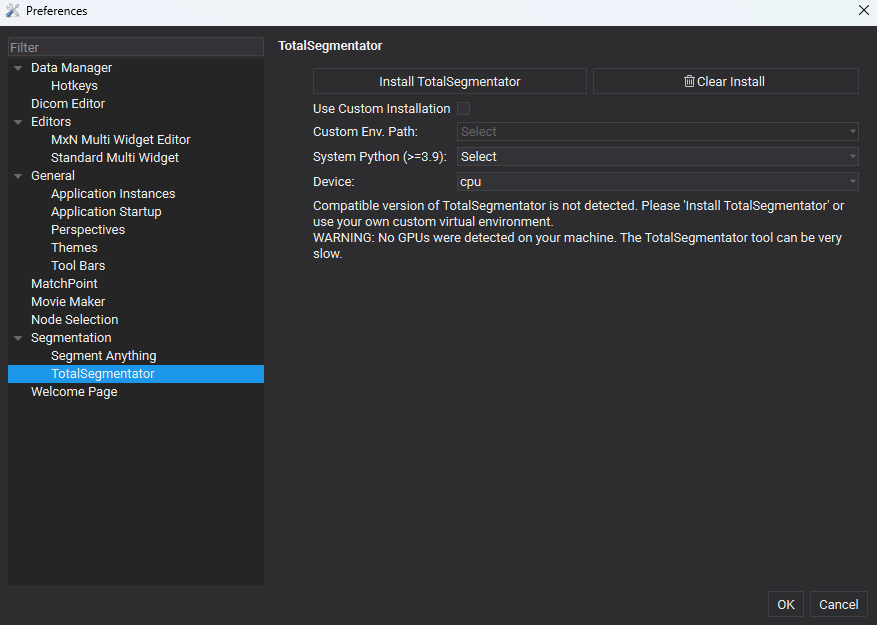

This tool provides a GUI to the deep learning-based segmentation algorithm called the TotalSegmentator (v2). With this tool, you can get a segmentation mask predicted for 117 classes in CT images, loaded in MITK. For a detailed explanation on tasks and supported classes etc., please refer to https://github.com/wasserth/TotalSegmentator

The tool assumes that you have Python >= 3.9 installed and available on your machine. We recommend installing TotalSegmentator via MITK. The "Install TotalSegmentator" action implicitly creates a Python virtual environment in an MITK-maintained directory. Note: on Debian/Ubuntu systems, you need to install the python3-venv package using the following command: apt install python3-venv. For best results, your machine should ideally be equipped with a CUDA-enabled GPU.

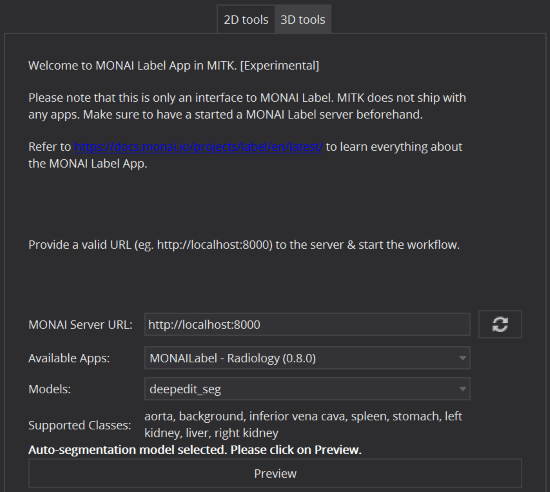

MONAI Label is a server-client system that facilitates interactive medical image annotation by using AI. MONAI Label typically hosts different "Apps", e.g. Radiology or Pathology. Each App hosts a set of pretrained models on pertaining datasets. MITK is tested for the Radiology app and only supports auto and click-based "deepgrow" models. For internal reasons, MITK doesn't support "deepedit" and "localization_spine" models specifically. The tool requires that you have a URL to a (self-) hosted MONAI Label server. For a detailed explanation of what MONAI Label is capable of, please refer to https://docs.monai.io/projects/label/en/latest/.

Any adjustments to the level window setting impact the segmentation. However, any applied color maps are ignored.

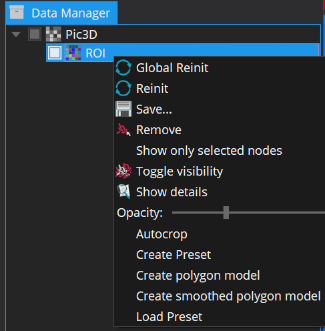

Segmentations are never an end in themselves. Consequently, the segmentation view adds a couple of "post-processing" actions, accessible through the context-menu of the data manager.

For segmentation of 3D+t images, some tools give you the option to choose between creating dynamic and static masks.

In general, segmentation is applied on the time frame that is selected when execution is performed. If you alter the time frame, the segmentation preview is adapted.